Contributors:

Balakumar Sundaralingam.

Introduction

Optical flow is one of many ways to detect motion in a video stream. Conversely, it can be used to interact with the real 3D world. Exploring this possibility motivated me to test whether optical flow from a single camera is good enough for collision avoidance. The basic idea is to keep the environment static and move the camera. This is discussed in the sections below.

Problem Statement

A holonomic ground robot equipped with a single forward-facing camera should drive forward and, when obstacles are present, avoid collisions and continue forward. Collision avoidance must be done using only the on-board camera. The following assumptions are made about the problem:

The environment is fairly simple and mainly made of non-textured surfaces.

The obstacles in the environment are highly textured.

The environment is static and the robot is the only moving object.

Approach

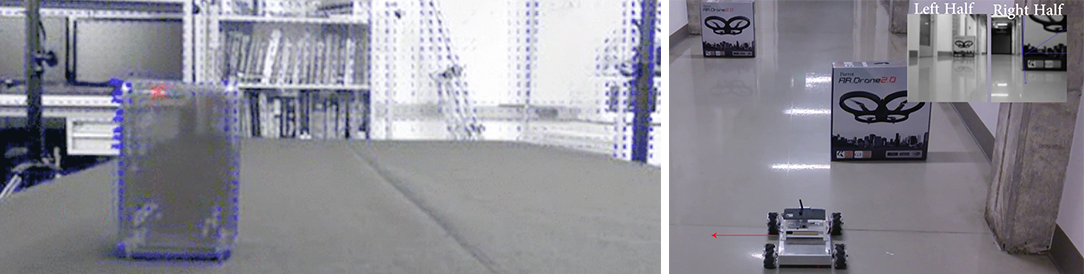

Many methods exist for avoiding obstacles with only one camera, but to stay close to the topics taught in class, optical flow is chosen. Optical flow detects the direction of motion across a set of images. With a static camera, flow vectors appear only on the dynamic objects in the scene. Conversely, if the camera is moving and the environment is static, there will be flow vectors throughout the scene. In this case, objects close to the camera have larger flow vectors than objects far away, as seen in Fig. 1. This property is used to avoid obstacles.
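
The depth dependence can be made explicit with the standard pinhole motion-field equations. As a sketch, assume (these symbols are not part of the original write-up) a camera with focal length $f$ that translates with velocity $(T_x, T_y, T_z)$ without rotating, and a static point at depth $Z$ that projects to image coordinates $(x, y)$. Its image velocity $(u, v)$ is then:

$$u=\frac{x\,T_{z}-f\,T_{x}}{Z},\qquad v=\frac{y\,T_{z}-f\,T_{y}}{Z}$$

Both components scale with $1/Z$, so for the same image position and camera motion, halving the distance to a surface doubles its flow. Under these assumptions the flow also vanishes at the image centre for pure forward motion, whatever the depth.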

The optical flow approach used here is similar to Project 4, except that flow is computed only for pixels detected as features. Initially, I used the basic approach taught in Project 4, but it was very slow because optical flow was computed for every pixel. To be real-time, the code should run at 20 Hz or faster. Computing optical flow only at extracted features is faster, but it requires a feature extraction step. Since I was not familiar with feature extraction algorithms, I used an OpenCV function called “goodFeaturesToTrack” to detect features. Once the features are detected, the following derivatives are computed:

$$x_{derivative}=\frac{image_{1}(i-1,j)-image_{1}(i+1,j)}{2}$$

$$y_{derivative}=\frac{image_{1}(i,j-1)-image_{1}(i,j+1)}{2}$$

$$time_{derivative}=image_{1}(i,j)-image_{2}(i,j)$$

where $image_{1}$ and $image_{2}$ are Gaussian-smoothed consecutive frames, and $i$, $j$ are the row and column positions of the pixel. These values are used to compute the flow vector with the formula below:

$$v=-(A^{T}A)^{-1}A^{T}b$$

where $A$ is an $N \times 2$ matrix whose columns are the x and y derivatives at the $N$ pixels of the chosen window, $b$ is an $N \times 1$ matrix of the time derivatives at the same pixels, and $v$ is a $2 \times 1$ matrix holding the velocity components along the x and y directions.
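
The least-squares step above can be sketched for a single feature as follows. This is a minimal illustration and not the project's actual code: it uses plain C++ instead of Eigen and OpenCV so that it stays self-contained, and the `Image` and `Flow` types and the `flowAtFeature` function are hypothetical names introduced here. It follows the derivative definitions above and solves the 2x2 normal equations in closed form.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical minimal grayscale image (row-major), standing in for a
// Gaussian-smoothed frame. Not a type from the original code.
struct Image {
    int rows;
    int cols;
    std::vector<double> data;
    double at(int i, int j) const {
        return data[static_cast<std::size_t>(i) * cols + j];
    }
};

struct Flow {
    double vx;  // component along i (rows), the write-up's "x"
    double vy;  // component along j (columns), the write-up's "y"
};

// Estimates the flow at feature (fi, fj) over a (2*half+1) x (2*half+1)
// window. Assumes im1 and im2 have the same size. Returns false if the
// window does not fit in the image or A^T A is (near) singular.
bool flowAtFeature(const Image& im1, const Image& im2,
                   int fi, int fj, int half, Flow& out) {
    // The window plus the one-pixel derivative stencil must stay inside.
    if (fi - half < 1 || fj - half < 1 ||
        fi + half > im1.rows - 2 || fj + half > im1.cols - 2) {
        return false;
    }
    // Accumulate the entries of A^T A and A^T b over the window.
    double sxx = 0.0, sxy = 0.0, syy = 0.0, sxt = 0.0, syt = 0.0;
    for (int i = fi - half; i <= fi + half; ++i) {
        for (int j = fj - half; j <= fj + half; ++j) {
            const double dx = (im1.at(i - 1, j) - im1.at(i + 1, j)) / 2.0;
            const double dy = (im1.at(i, j - 1) - im1.at(i, j + 1)) / 2.0;
            const double dt = im1.at(i, j) - im2.at(i, j);
            sxx += dx * dx;
            sxy += dx * dy;
            syy += dy * dy;
            sxt += dx * dt;
            syt += dy * dt;
        }
    }
    // A textureless window gives a singular A^T A; report failure.
    const double det = sxx * syy - sxy * sxy;
    if (std::abs(det) < 1e-12) {
        return false;
    }
    // v = -(A^T A)^{-1} A^T b, using the closed-form 2x2 inverse.
    out.vx = -(syy * sxt - sxy * syt) / det;
    out.vy = -(sxx * syt - sxy * sxt) / det;
    return true;
}
```

The singular case is the reason the problem statement assumes textured obstacles: on a flat, untextured patch all derivatives are close to zero and no flow can be recovered.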

With the approach for computing optical flow in a set of images explained, the next step is to use this method for collision avoidance.

The largest flow vectors in the left and right halves of the image are each compared with a threshold to check whether an obstacle is close to the robot and the robot is on a collision course. The robot's motion is then chosen by the logic in the table below, so the robot stays in motion while it avoids obstacles. When both halves have flow vectors greater than the threshold, the robot halts. Once it halts, however, the camera stops moving, the flow vectors drop to zero, and the robot moves forward again, so in this case it keeps oscillating between stopping and moving.

| Half whose maximum flow vector exceeds the threshold | Robot direction of motion |
|---|---|
| None | Forward |
| Left half | Go right |
| Right half | Go left |
| Both halves | Stop |
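
The decision table can be sketched in code as follows. This is an illustration under stated assumptions, not the project's code: the `Motion` enum, the `FlowVector` struct, and the `chooseMotion` function are hypothetical names, and the comparison against the threshold is assumed to be on the magnitude of each flow vector.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

enum class Motion { Forward, GoRight, GoLeft, Stop };

// Hypothetical record for one tracked feature: its column in the image
// and its estimated flow components.
struct FlowVector {
    double col;
    double vx;
    double vy;
};

// Picks the robot motion from the largest flow magnitude in each image half.
// Assumption: "greater than the threshold" means the vector magnitude
// exceeds a scalar threshold.
Motion chooseMotion(const std::vector<FlowVector>& flows,
                    int imageCols, double threshold) {
    double maxLeft = 0.0;
    double maxRight = 0.0;
    for (const FlowVector& f : flows) {
        const double magnitude = std::hypot(f.vx, f.vy);
        if (f.col < imageCols / 2.0) {
            maxLeft = std::max(maxLeft, magnitude);
        } else {
            maxRight = std::max(maxRight, magnitude);
        }
    }
    const bool leftBlocked = maxLeft > threshold;
    const bool rightBlocked = maxRight > threshold;
    if (leftBlocked && rightBlocked) return Motion::Stop;
    if (leftBlocked) return Motion::GoRight;   // obstacle on the left
    if (rightBlocked) return Motion::GoLeft;   // obstacle on the right
    return Motion::Forward;
}
```

With no features, or with all flow magnitudes below the threshold, the function returns `Motion::Forward`. This matches the behaviour described above, where a halted robot sees zero flow and starts moving again.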

Implementation

The robot has to perform computations in real time, so the OpenCV libraries are used. The Eigen library is used for the matrix computations in optical flow. All processing is done on board the robot, on an ARM-based single-board computer called the ODROID-U3, which runs a custom version of Linux. The Robot Operating System (ROS) is used as the framework for easy implementation. With all the optimizations done to the best of my knowledge, the optical flow algorithm ran at 50 Hz.

Results and Discussion

The approach was tested in a long corridor with minimal texture. The robot was run in two different setups. The first run is in the presence of two obstacles, and the robot moves left and right to avoid collision, as seen below:

Code and Video

The code is written in C++ with the Eigen and OpenCV libraries. The following built-in OpenCV functions are used: goodFeaturesToTrack (to find features) and GaussianBlur (to blur the image for optical flow computation). No OpenCV optical flow functions were used; the optical flow code was written by hand. The code compiles on Linux and requires ROS Hydro or newer.

Source Code: Git

In the video below, the stream from the on-board camera lags because of the low bandwidth of the wireless network; the lag is not related to the algorithm.

Conclusion

Thus, optical flow can be used for collision avoidance if the obstacles have enough texture. This could be extended to cheap collision avoidance systems, such as those in toys.